Video editing constantly requires editors to separate the wheat from the chaff. For example, an editor of a sports event may receive anywhere from 3 to 8 hours of raw footage and be asked to create a 5- to 10-minute highlight reel. That is not easy: cutting a short reel from so many hours of footage is time-consuming. Even if the photographers logged notes while shooting, the editor might not agree with them, or might feel a duty to see all the content for themselves. That’s a few hours of work even at 4x fast-forward. We’ll be talking here about how AI can speed up this process. (This is not an infomercial: if you want to understand the details of our tech, check our infographic.)

The film we analyze

Days To Come from Vital Films is the sort of output produced by studios that are at the top of their game. Using multiple camera positions (ground and chopper), multiple angles, different levels of zoom, and other techniques, the crew captured the excitement of a wonderful day on the Aspen slopes (kudos to Vital Films for licensing the content under CC-BY). To give a sense of some of the reasons why this film is engaging, we applied our human-engagement predictive algorithms to this content.

To understand what we found, we strongly encourage you to watch the entire 6.5-minute film and our engagement-prediction.

Necessary technical details

To understand how these algorithms were applied to this specific film, you need to know three things:

What our algorithms find

1. Almost all the impressive high-altitude ski jumps are associated with peaks in the engagement-prediction graph. Not coincidentally, these are often also the sections of the film where the editors drew attention to an interesting moment by abruptly slowing the footage down. This shows good agreement between the algorithm outputs and the editors’ own priorities. Keep in mind that our algorithms were applied in a way that was insensitive to the presence of slow-motion effects (meaning they’d produce the same values per frame if the footage were presented at normal speed).

2. Some peaks that are not associated with high-altitude jumps are zoom-in shots with relatively high detail, including close-ups on skis, or focusing on an animal (3:14), or focusing on the ski lifts viewed against the mountain, or a rare shot of a group of skiers (4:08).

3. Even what seem like small changes can make a big difference. Zooming out from the ski lifts at 3:32 over 3 seconds produces an immediate drop in the magnitude of predicted engagement.

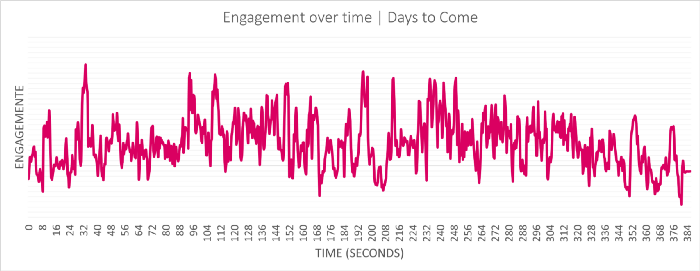

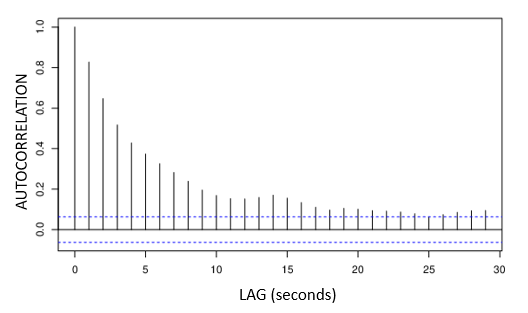

4. One last point, which is a bit geeky but worth the while (2-minute reading time), has to do with how this movie controls the dynamics of engagement. Looking at the engagement-prediction graph, which we separate here, we have the intuitive sense that the level of engagement does not change abruptly each second. When engagement increases, it typically keeps increasing or stays high for a few more seconds, and when it decreases, it typically keeps decreasing or stays low for a few seconds. In other words, the time series is ‘smooth’. The magnitude of this smoothness can be measured by what is called an ‘autocorrelation function’, which quantifies how similar a time series is to its own lagged versions. For Days To Come, the analysis shows a strong autocorrelation at lags of up to around 10 seconds. Mathematically, this means that knowing the current level of engagement makes it possible to guess, with above-chance accuracy, what the level of engagement will be 10 seconds from now.

But forget about the math; for purposes of understanding viewer engagement, this means that the film drives slow changes in engagement levels over time, no faster than, say, every 5 to 10 seconds. This is an interesting property that emerges from how the film was edited and contributes to what makes it effective.
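For readers who do want the math, here is a minimal sketch of how such a smoothness measurement could be made. It is not the code behind the analysis above: the function names are hypothetical, the estimator is the standard sample autocorrelation, and the two series are synthetic stand-ins (a smoothed random series and plain white noise), not the film's engagement data. The only figures taken from this article are the 400 ms sampling period and the 6.5-minute running time.

```python
import math
import random

SAMPLE_PERIOD_S = 0.4  # one data point every 400 ms (2.5 Hz), per the article


def autocorrelation(series, lag):
    """Standard sample autocorrelation of `series` at a non-negative integer lag."""
    n = len(series)
    if not 0 <= lag < n:
        raise ValueError("lag must satisfy 0 <= lag < len(series)")
    mean = sum(series) / n
    variance = sum((x - mean) ** 2 for x in series)
    if variance == 0:
        raise ValueError("series is constant; autocorrelation is undefined")
    covariance = sum(
        (series[t] - mean) * (series[t + lag] - mean) for t in range(n - lag)
    )
    return covariance / variance


def seconds_to_lag(seconds, period_s=SAMPLE_PERIOD_S):
    """Convert a lag in seconds to a whole number of samples."""
    return round(seconds / period_s)


def moving_average_noise(n, window, seed):
    """Synthetic series: Gaussian noise averaged over `window` samples."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in range(n + window - 1)]
    return [sum(noise[i:i + window]) / window for i in range(n)]


N_SAMPLES = 975  # 6.5 minutes sampled at 2.5 Hz

# Assumption: a 25-sample (10 s) moving average stands in for a 'smooth' series.
smooth = moving_average_noise(N_SAMPLES, window=25, seed=0)
# window=1 leaves the noise untouched, so neighbouring samples are unrelated.
white = moving_average_noise(N_SAMPLES, window=1, seed=1)

for seconds in (0, 2, 4, 10, 20):
    lag = seconds_to_lag(seconds)
    print(
        f"lag {seconds:>2} s ({lag:>2} samples): "
        f"smooth={autocorrelation(smooth, lag):+.2f}  "
        f"white={autocorrelation(white, lag):+.2f}"
    )
```

The smoothed series should stay clearly correlated with itself at short lags and fade as the lag approaches the averaging window, while the white-noise series should hover near zero at every lag above 0. To try this on real data, replace `smooth` with a list of engagement values; how to load them depends on the file format, which is not covered here.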

Final thoughts

We hope this analysis showed how human-oriented AI can help editors perform their jobs faster and focus on what counts: putting together an interesting cut. One of the reasons that any editing job is expensive is the massive amount of work that goes into selecting content. Reducing this burden will allow editors to focus on their core business, and pass on savings and better results to clients.

You can download and analyze the engagement data used to construct these graphs (one data point every 400 ms, i.e. a sampling frequency of 2.5 Hz).
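If you want to line the data points up with the timestamps mentioned above, the arithmetic is simple. The sketch below assumes that the first data point corresponds to 0:00 in the film, which is our guess for illustration rather than something the data file is known to guarantee; the helper names are hypothetical.

```python
SAMPLE_PERIOD_MS = 400  # one data point every 400 ms (2.5 Hz), per the article


def sample_to_timestamp(index):
    """Map a sample index to an m:ss timestamp (assumes sample 0 is at 0:00)."""
    total_seconds = (index * SAMPLE_PERIOD_MS) // 1000
    minutes, seconds = divmod(total_seconds, 60)
    return f"{minutes}:{seconds:02d}"


def timestamp_to_sample(timestamp):
    """Map an m:ss timestamp to the index of the sample taken at that moment."""
    minutes, seconds = timestamp.split(":")
    total_ms = (int(minutes) * 60 + int(seconds)) * 1000
    return total_ms // SAMPLE_PERIOD_MS


# Timestamps referenced earlier in this article.
for stamp in ("3:14", "3:32", "4:08"):
    print(stamp, "-> sample", timestamp_to_sample(stamp))
```

Working in whole milliseconds keeps the conversion free of floating-point rounding, since 0.4 seconds has no exact binary representation.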